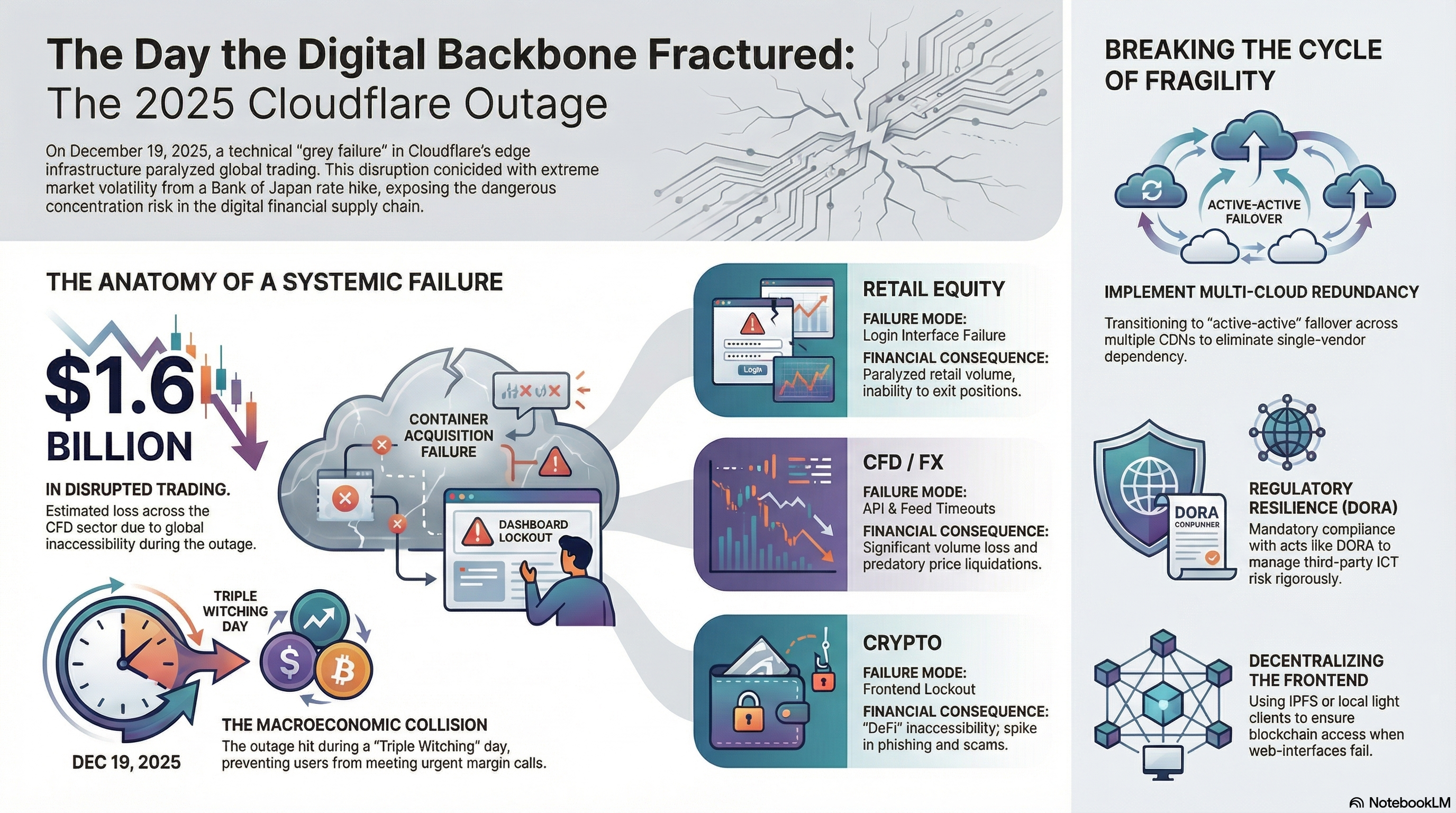

Cloudflare outage and how it exposed systemic fragility in global financial markets, trading platforms, and crypto infrastructure

On the morning of December 19, 2025, the internet did not collapse in a dramatic, cinematic way. No single switch was flipped. No ominous “offline” banner appeared across the web. Instead, something subtler and far more dangerous happened. The digital world began to stutter. Pages loaded halfway, buttons refused to respond, dashboards froze as if thinking too hard. For most users it felt like irritation. For global financial markets, it felt like suffocation.

The first whispers came from YouTube. A few unexplained 502 errors. Streams buffering endlessly. Then Google services began to wobble, and within hours it became clear that this was not a platform problem but an infrastructure one. Cloudflare, one of the silent giants holding up the modern internet, was slipping. And when Cloudflare slips, markets don’t just slow down — they lose their footing.

This wasn’t happening in a vacuum. The Bank of Japan had just delivered a long-anticipated rate hike, jolting currency markets awake and pulling liquidity out of risky assets like a tide reversing direction. It was triple witching day, when derivatives expire and volatility is already baked into the air. Traders were alert, hands hovering over keyboards, risk managers watching margins with narrowed eyes. Then the screens went blank.

What unfolded next exposed something uncomfortable about the way global finance now breathes through the internet’s lungs.

Cloudflare eventually described the incident as “intermittent network performance issues,” which is a bit like calling a heart arrhythmia a mild flutter. This wasn’t a clean outage. Static pages often loaded fine, giving the illusion that everything was normal. But anything dynamic — trading terminals, APIs, authentication flows — began failing unpredictably. Internal server errors. Timeouts. Containers that simply refused to spin up. A classic grey failure: the kind that slips past alarms while quietly breaking everything that matters.
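To make the grey-failure idea concrete, here is a minimal sketch of a monitor that judges health by the error rate of recent dynamic requests rather than by whether a static page loads. The class name, window size, and threshold are illustrative assumptions, not anything Cloudflare or the affected brokers are known to run.

```python
from collections import deque


class DynamicPathMonitor:
    """Hypothetical monitor: tracks outcomes of recent *dynamic* probes
    (login, order API, balance fetch) instead of a single static ping."""

    def __init__(self, window=20, max_error_rate=0.2):
        # Keep only the most recent `window` probe results.
        self.results = deque(maxlen=window)
        self.max_error_rate = max_error_rate

    def record(self, ok):
        """Store the outcome of one probe (True = success)."""
        self.results.append(bool(ok))

    def error_rate(self):
        """Fraction of failed probes in the current window."""
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)

    def degraded(self):
        """True when failures exceed the tolerated rate, even if some
        requests (for example static pages) still succeed."""
        return self.error_rate() > self.max_error_rate
```

A static health check would have stayed green all morning. A monitor like this one flags trouble as soon as a meaningful share of dynamic calls start failing, which is the signal a trading desk actually cares about.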

For a trader trying to close a leveraged position, this distinction is cruel. Prices were moving. Margin calls were firing. But the “close trade” button might as well have been painted on the screen.

By the time Cloudflare acknowledged the scope of the issue, the damage was already spreading. Edge compute resources were struggling to acquire containers, a technical phrase that sounds harmless until you understand what it means. In modern cloud infrastructure, every request needs a tiny execution environment. When those environments can’t be provisioned fast enough, requests don’t queue politely. They die. And when millions of automated systems retry at once, the failure amplifies itself, like a microphone too close to a speaker.
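The feedback loop described above is usually called a retry storm, and the standard client-side remedy is capped exponential backoff with random jitter. The sketch below shows the general technique under stated assumptions: `send` stands in for any request function, and the base delay, cap, and retry count are illustrative defaults rather than values taken from the incident.

```python
import random
import time


def backoff_ceiling(attempt, base=0.5, cap=30.0):
    """Upper bound (seconds) for the wait before retry number `attempt`."""
    return min(cap, base * (2 ** attempt))


def call_with_backoff(send, max_retries=5, base=0.5, cap=30.0,
                      sleep=time.sleep, rng=random.random):
    """Call `send()`; on ConnectionError, wait a random time below an
    exponentially growing ceiling before trying again ("full jitter").

    `send`, `sleep`, and `rng` are injectable so the logic can be
    exercised without real network calls or real waiting.
    """
    for attempt in range(max_retries + 1):
        try:
            return send()
        except ConnectionError:
            if attempt == max_retries:
                raise  # out of retries: surface the failure to the caller
            # Randomizing the wait spreads clients out so they do not
            # all hammer the recovering service at the same instant.
            sleep(rng() * backoff_ceiling(attempt, base, cap))
```

Without the jitter, every client that failed at the same moment retries at the same moment, which is exactly the microphone-and-speaker effect.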

Confusion deepened because Google Cloud services were also showing stress. Pinning the failure on any single provider became nearly impossible. This is the dark secret of today’s internet: the biggest players are so tightly interwoven that causality blurs. A stumble in one control plane can feel like a coordinated failure everywhere else. For brokers trying to explain downtime to furious clients, “it wasn’t us” is not a satisfying answer.

The timing could not have been worse.

As the yen strengthened and carry trades began to unwind, risk assets sold off sharply. Bitcoin slid, equities wobbled, volatility spiked exactly as the playbook predicts. But execution — the sacred act of turning intent into action — was suddenly unavailable. Traders couldn’t top up accounts. They couldn’t hedge. They couldn’t even see accurate balances. Automated liquidation engines, running on more robust internal rails, did what they’re designed to do once connectivity returned: they liquidated mercilessly, often into thin order books hollowed out by hours of forced absence.

In India, the impact was visceral. Zerodha, Groww, Angel One — household names for retail traders — went dark during active market hours. Millions of users stared at frozen apps. Zerodha attempted to activate a WhatsApp-based backup trading channel, a clever idea that collapsed under a simpler truth: most users didn’t know it existed. Redundancy without education is theater. Nithin Kamath’s public apology carried an edge of warning when he reminded users that a single company now touches a quarter of global internet traffic.

In the CFD and FX world, where leverage is high and patience is nonexistent, the outage cut even deeper. Brokers like Skilling, FXPro, Monaxa and others displayed generic Cloudflare error pages, an almost insulting sight for clients risking real money. Industry estimates later suggested that around $1.6 billion in trading volume evaporated into the void. Not lost in price movement — lost because trades never happened.

The United States fared better on the surface, but even there cracks showed. Schwab, Vanguard, Fidelity all reported issues. Whether Cloudflare was the direct culprit or just part of a broader degradation hardly mattered. The perception was enough. Confidence, once shaken, doesn’t wait for root cause analysis.

Crypto, ironically, suffered one of its most revealing moments. A system built on the promise of decentralization froze because its front doors were centralized. Coinbase users couldn’t log in. Binance and OKX APIs hiccupped, forcing market-making bots offline and draining liquidity from order books. DeFi protocols kept running on-chain, but their web interfaces failed to load. Smart contracts were alive; humans were locked out.

It was a reminder nobody in crypto likes to dwell on: most users don’t interact with blockchains, they interact with websites. And those websites sit on the same brittle Web2 scaffolding as everything else.

Security risks bloomed in the chaos. Outages are fertile ground for scammers, and 2025 has already been a banner year for crypto theft, much of it tied to North Korean state actors. When official support channels go silent, fake ones rush in. The December 19 disruption likely gave phishing networks hours of uncontested opportunity while legitimate teams scrambled just to restore service.

Regulators were watching all of this with growing impatience. In Europe, DORA has turned operational resilience from a technical concern into a legal obligation. “Our CDN was down” no longer passes as an excuse. In the UK, even the FCA felt the tremor, ensuring the issue will stay uncomfortably close to the top of the agenda. For companies like Coinbase, already locked in legal battles over market structure and jurisdiction, recurring outages quietly erode the claim of being the safer, regulated alternative.

What makes December 19 unsettling is not just the scale of disruption, but the pattern it completes. Three significant Cloudflare incidents in just over a month, each different in nature, each harder to predict. A control-plane bug in November. A WAF logic failure in early December. Then this — a resource orchestration breakdown at the edge. Complexity is piling up faster than resilience.

The internet is growing, but not gently. AI agents, crawlers, automated systems now generate traffic with a velocity and persistence humans never could. Defenses designed to manage that load are themselves becoming sources of fragility. It’s like reinforcing a bridge until it collapses under its own weight.

The lesson here is not that Cloudflare failed. It’s that the system did exactly what it was designed to do, and that design is no longer enough.

Financial firms will talk more seriously about multi-CDN strategies, about bypass paths for critical execution flows, about paying the cost of redundancy upfront instead of in reputational damage later. Crypto projects will revisit decentralized frontends and light clients, not as ideals but as survival tools. Regulators will start asking harder questions, and this time they’ll expect real answers.
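What a bypass path for a critical execution flow might look like, in its simplest form, is an ordered list of routes that the client walks until one works. This is a minimal sketch, not any broker's actual design: the route names and the `send` callables are hypothetical placeholders.

```python
def submit_with_failover(order, routes):
    """Try each (name, send) route in order and return the first success.

    `routes` is a list of (name, callable) pairs, for example a primary
    CDN, a secondary CDN, and a direct-to-origin path. All names here
    are illustrative assumptions.
    """
    errors = []
    for name, send in routes:
        try:
            # Report which route carried the order, for later auditing.
            return name, send(order)
        except (ConnectionError, TimeoutError) as exc:
            errors.append(f"{name}: {exc}")
    # Every path failed: raise one error that lists what was tried.
    raise RuntimeError("all routes failed: " + "; ".join(errors))
```

The code is the easy part. The expensive part is keeping the secondary routes provisioned, tested, and known to users, so that the fallback is more than theater on the day it is needed.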

December 19, 2025 will be remembered not as a single bad day, but as a diagnostic moment. A stress test the global digital-financial machine barely survived. The takeaway is uncomfortable but clear: in a world this interconnected, resilience isn’t about preventing failure anymore. It’s about assuming failure, and designing systems that don’t freeze when the internet forgets how to breathe.

The full sequence of events is best understood visually. The infographic below lays out how a brief infrastructure disruption, a major monetary policy shift, and peak market activity converged in the same window, creating friction in global trading despite systems remaining largely online. Reviewing it provides a clearer sense of how timing, rather than duration, shaped the impact of that day.
